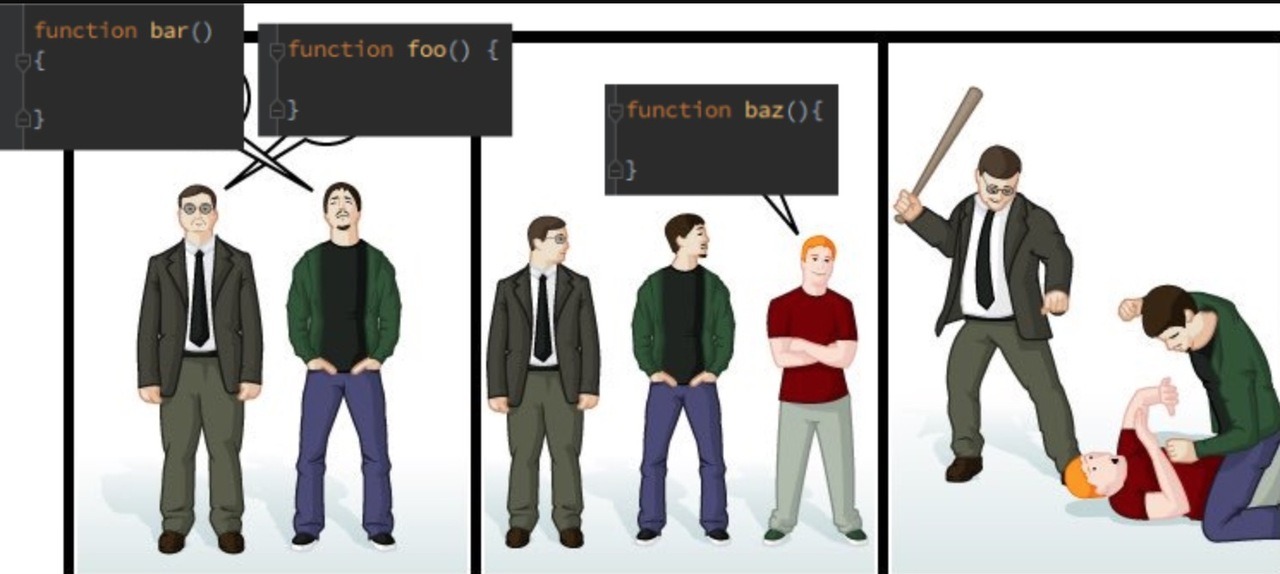

Programmers like to argue

In programming, a function (or method) is a piece of code you can reuse. Usually you put a piece of data into a function, it does something to that data, and it spits out a different piece of data.

The analogy I like to use is that a function is like one of those conveyor-belt toasters at Quiznos or some other sandwich place: you put your sandwich on one end, something happens inside the machine, and out the other end comes a toasty sandwich.

If the toaster were a function, you might write it like this:

function toaster(untoastedSandwich){

toastedSandwich = makeToasty(untoastedSandwich)

return toastedSandwich

}
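To see the toaster actually run, here's a minimal sketch. The post never says what `makeToasty` does, so the version below is a made-up stand-in that just sticks the word "toasted" in front of whatever you give it.

```javascript
// makeToasty is a hypothetical helper, not defined in the original post.
// Here it just labels the sandwich as toasted.
function makeToasty(sandwich) {
  return "toasted " + sandwich;
}

function toaster(untoastedSandwich) {
  const toastedSandwich = makeToasty(untoastedSandwich);
  return toastedSandwich;
}

// Put a sandwich in one end, get a toasty one out the other.
const lunch = toaster("turkey sub");
console.log(lunch); // prints "toasted turkey sub"
```

You can reuse the same function on any sandwich you like, which is the whole point of writing it once.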

A lot of programming languages use that mix of parentheses and curly brackets ({ and }) to write functions. And many of them don’t care how you space things out or where you put your line breaks.

In JavaScript, and many other languages, I could write the above function a bunch of different ways:

function toaster(untoastedSandwich) {

toastedSandwich = makeToasty(untoastedSandwich)

return toastedSandwich

}

function toaster(untoastedSandwich)

{

toastedSandwich = makeToasty(untoastedSandwich)

return toastedSandwich

}

function toaster(untoastedSandwich)

{toastedSandwich = makeToasty(untoastedSandwich)

return toastedSandwich}

function toaster(untoastedSandwich){toastedSandwich = makeToasty(untoastedSandwich); return toastedSandwich}

A computer would run all of those the exact same way, so there’s not really a “right” way to write functions.
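If you want to check that claim yourself, here's a rough sketch. I've given each layout its own name (`toasterSameLine`, `toasterNextLine`, `toasterOneLine`) so they can sit side by side, and `makeToasty` is again a made-up stand-in, since the post never defines it.

```javascript
// makeToasty is a hypothetical stand-in; the original post never defines it.
function makeToasty(sandwich) { return "toasted " + sandwich; }

// Opening brace on the same line as the function name.
function toasterSameLine(untoastedSandwich) {
  const toastedSandwich = makeToasty(untoastedSandwich);
  return toastedSandwich;
}

// Opening brace on its own line.
function toasterNextLine(untoastedSandwich)
{
  const toastedSandwich = makeToasty(untoastedSandwich);
  return toastedSandwich;
}

// Everything crammed onto one line.
function toasterOneLine(untoastedSandwich){const toastedSandwich = makeToasty(untoastedSandwich); return toastedSandwich}

// Run the same sandwich through all three toasters.
const results = [toasterSameLine, toasterNextLine, toasterOneLine].map(
  (toast) => toast("blt")
);

// Check that every toaster produced the same thing as the first one.
const allSame = results.every((result) => result === results[0]);
console.log(allSame); // prints true
```

Three layouts, one result. The only thing that changes is how the code looks to the humans reading it.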

The joke is that when there’s no “right” way to do something, programmers like to argue about what is “best.” This comic uses the Mac vs PC meme. The first two guys, who write their functions in different ways, would normally be arguing with each other, but here they arbitrarily gang up on the third guy, whose way of writing functions is only slightly different from theirs.

If you see two programmers arguing about something, the harder they argue, the less likely it is that there’s a right answer.

(Source: reddit.com)